Is the risk to privacy caused by ChatGPT as bad as it seems?

Since its launch, ChatGPT and the possibilities that AI brings have been hotly debated.

Whilst many have marvelled at this seemingly limitless tool that promises to magnify human intellect, others have raised concerns about the largely unquantified risks that these intelligent learning platforms present.

Last month, Italy made a stand against ChatGPT, becoming the first Western nation to ban the platform “until ChatGPT respects privacy”.

So what are the privacy implications of these new tools, and how worried should we be about them?

ChatGPT's privacy black hole

Given the indiscriminate way in which ChatGPT gathers data, it can draw on a huge range of source material, including social media, blog posts, product reviews, chat forums and even email threads if publicly available.

This means that personal information and other data are being used without people’s knowledge or consent.

If you sign up to ChatGPT, you’re agreeing to a privacy policy that allows your IP address, browser type and settings to be stored, not to mention all data from your interactions with ChatGPT and your wider internet browsing activity.

All of this can be shared with unspecified third parties “without further notice to you”.

By analysing your conversations with it alongside your other online activity, ChatGPT develops a profile of your interests, beliefs and concerns.

This is also true of today’s search engines. But as an “intelligent” learning platform, ChatGPT has the potential to engage with both the user and the information it is given in a completely new way, creating a dialogue that might fool you into thinking you are speaking with another human, not an AI system.

Bots can get things wrong, too

ChatGPT draws all these inputs together and analyses them on a scale not previously possible in order to “answer anything”.

If asked the right question, it can easily expose the personal information of both its users and of anyone who has either posted or been mentioned on the internet.

Without an individual’s consent, it could disclose their political beliefs or sexual orientation, or release embarrassing or even career-ruining information about them.

ChatGPT’s proven tendency to get things wrong and even make things up could lead to damaging and untrue allegations.

Some people will nonetheless believe them and spread the false statements further, convinced that the chatbot has uncovered previously hidden information.

Could basic safeguards tackle these issues?

Given the power of these machine learning systems, it’s difficult to build even basic safeguards into their programming.

Their entire premise is that they can analyse huge amounts of data, searching all corners of what is publicly available online and drawing conclusions from it very quickly.

There is no way to detect when the chatbot is collecting data without someone’s knowledge or consent, and without sources, there is no opportunity to check the reliability of the information you are fed.

We’ve seen how easily people have already managed to “jailbreak” current safeguards, which offers little hope that any further rules built into the platforms will not be circumvented too.

Privacy laws are not keeping up with the pace of innovation

Privacy laws have a lot of catching up to do to address this new threat, the full extent of which we haven’t yet seen.

The way in which ChatGPT and others are using our data is already a clear violation of privacy, especially when that data is sensitive and can be used to identify us.

Contextual integrity, a core principle of existing privacy laws, states that even when someone’s information or data is publicly available, it still shouldn't be revealed outside of its original context. This is another rule ignored by ChatGPT.

We have barely even touched on the data protection infringements inherent in the way AI chatbots learn.

There are currently no procedures for individuals to check what personal information about them is being stored, or to request its deletion, as they can with other companies.

Nor have we consented to this data being stored in the first place. The mere fact that it exists somewhere on the internet should not give ChatGPT the right to use it.

How can we protect our privacy in this new era of artificial intelligence?

Private Internet Access has been closely monitoring the privacy risks inherent in ChatGPT and other AI platforms.

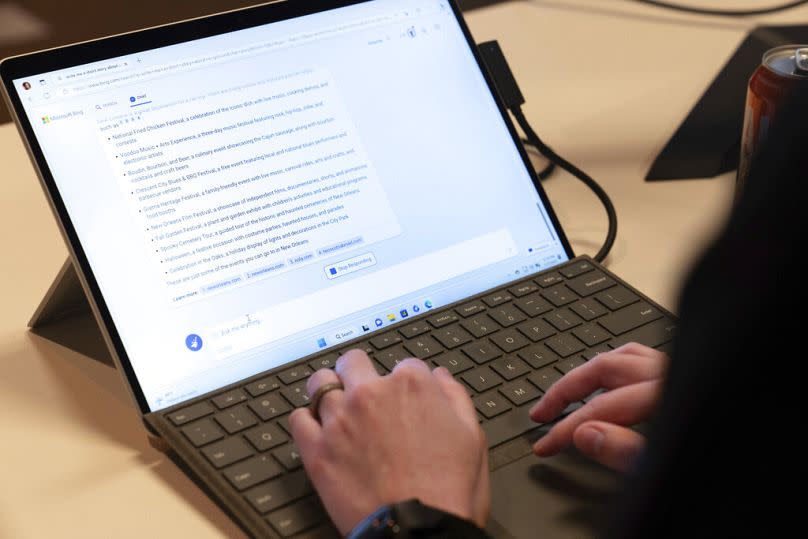

With many competitors, including Microsoft Bing, Google Bard and Chinese tech giant Baidu’s Ernie, hotly chasing OpenAI’s lead in a sector that is almost completely unregulated, the privacy and security implications are only growing.

Whilst embracing AI’s potential, we must remain vigilant about the privacy threat it presents. The laws and regulations protecting our privacy need to adapt.

As individuals, we need to take steps to protect our data and keep our personal information safe.

This includes thinking about exactly what we are happy to share online and knowing how easily a machine-learning platform can now find, extract and share this information with anyone.

Ultimately, we need to be wary of how much trust we put in this new technology, questioning rather than blindly accepting the answers we are presented with.

Jose Blaya is the Director of Engineering at Private Internet Access. He is a tech engineering expert with a strong background in cybersecurity and mobile platforms and a global advocate for digital privacy and freedom.

_At Euronews, we believe all views matter. Contact us at view@euronews.com to send pitches or submissions and be part of the conversation._

Yahoo Movies