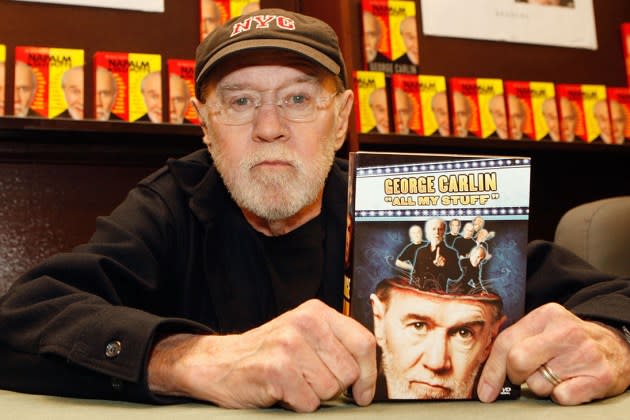

George Carlin’s Estate Settles Lawsuit Against Podcasters Over AI Special

A settlement has been reached between the estate of George Carlin and the makers of a podcast who used generative artificial intelligence to impersonate the late stand-up comic’s voice and style for an unauthorized special.

Will Sasso and Chad Kultgen, hosts of the podcast Dudesy, and George Carlin’s estate notified the court on Tuesday of an agreement to resolve the case. Under the deal, an injunction will be entered that bars further use of the video, which has already been taken down, and states that it was made in violation of the comic’s rights, says Josh Schiller, a lawyer for the estate. Further terms of the agreement weren’t disclosed. Schiller declined to comment on whether there were monetary damages.

The settlement marks what’s believed to be the first resolution to a lawsuit over the misappropriation of a celebrity’s voice or likeness using AI tools. It comes as Hollywood is sounding the alarm over utilization of the tech to exploit the personal brands of actors, musicians and comics, among others, without consent or compensation.

“This sends a message that you have to be very careful about how you use AI technology,” Schiller says, “and to be respectful of people’s hard work and good will.” He adds the deal will “serve as a blueprint for resolving similar disputes going forward where an artist or public figure has their rights infringed by AI technology.”

Author and producer Kelly Carlin, daughter of George Carlin, said in a statement that “this case serves as a warning about the dangers posed by AI technologies and the need for appropriate safeguards not just for artists and creatives, but every human on earth.”

The legal battle stems from an hourlong special, titled George Carlin: I’m Glad I’m Dead, that was released in January on the podcast’s YouTube channel. In the episode, an AI-generated George Carlin, imitating the comedian’s signature style and cadence, narrates commentary over images created by AI and tackles modern topics such as the prevalence of reality TV, streaming services and AI itself.

The podcast describes itself as a “first of its kind media experiment.” Its premise revolves around an AI program called “Dudesy AI,” which has access to most of the hosts’ personal records, including text messages, social media accounts and browsing histories, and uses them to write episodes in the style of Sasso and Kultgen.

The podcasters approached George Carlin’s estate with an offer to take the video down and agree not to republish it on any platform moving forward, Schiller says. He adds, “We wanted to move on from this quickly and honor [Carlin’s] legacy and restore that by getting rid of this.”

The lawsuit alleged copyright infringement for unauthorized use of the comedian’s copyrighted works.

At the start of the video, it’s explained that the AI program that created the special ingested five decades of George Carlin’s original stand-up routines, which are owned by the comedian’s estate, as training materials.

The complaint also alleged violations of right of publicity laws for use of George Carlin’s name and likeness. It pointed to the promotion of the special as an AI-generated George Carlin installment, where the deceased comedian was “resurrected” with the use of AI tools.

The special wasn’t the first time Dudesy used the AI to impersonate a celebrity. Last year, Sasso and Kultgen released an episode featuring an AI-generated Tom Brady performing a stand-up routine. It was taken down after the duo received a cease-and-desist letter.

In the absence of federal laws covering the use of AI to mimic a person’s likeness or voice, a patchwork of state laws has filled the void. Still, there’s little recourse for those in states that have not passed such protections, which has prompted lobbying from Hollywood.

That spurred a bipartisan coalition of House lawmakers to introduce in January a long-awaited bill to prohibit the publication and distribution of unauthorized digital replicas, including deepfakes and voice clones. The legislation is intended to give individuals the exclusive right to approve the use of their image, voice and visual likeness by conferring intellectual property rights at the federal level. Under the bill, unauthorized uses would be subject to stiff penalties, and lawsuits could be brought by any person or group whose exclusive rights were affected.

In March, Tennessee became the first state to pass legislation specifically targeted at protecting musicians from unauthorized use of AI to mimic their voices. The Ensuring Likeness Voice and Image Security Act, or ELVIS Act, builds upon the state’s old right of publicity law by adding an individual’s “voice” to the realm it protects. California has yet to update its statute.

Tuesday’s deal arrives on the heels of OpenAI preparing to launch a new tool that can re-create a person’s voice from a 15-second recording. When given a recording and text, it can read back that text in the voice from the recording. The Sam Altman-led outfit said it is holding back the technology for now in order to better understand potential harms, like using it to spread misinformation and impersonate people to facilitate scams.

Amid the rise in AI voice mimicry tools, there’s discussion of whether platforms that host infringing content should be subject to liability. Under the Digital Millennium Copyright Act, platforms like YouTube can avail themselves of safe harbor provisions as long as they take certain steps to remove potentially infringing content. Artist advocacy groups have called for revisions to the law.

“This is not a problem that will go away by itself,” Schiller said in a statement. “It must be confronted with swift, forceful action in the courts, and the AI software companies whose technology is being weaponized must also bear some measure of accountability.”
